By Graydon Kupfer

The release of OpenAI’s ChatGPT program late last year has made waves throughout writers’ circles and has gotten educators of all kinds talking. Some are more concerned than others, but the general sentiment is that the program has the potential to change professional and educational writing forever.

This article will explore three points of contention surrounding artificial intelligence and ChatGPT. The first point is the comparison between writing done by humans and writing done by AI. Second, the article will question the role of AI within an educational setting. Finally, it will ask and answer questions surrounding the ethics of using AI.

One of the biggest questions regarding ChatGPT and writing done by artificial intelligence is how it compares to writing done by humans. How easy is it to tell the difference? Reading enough of ChatGPT’s responses to prompts makes it clear that certain characteristics can indicate that a response was written by AI.

A New Point of View

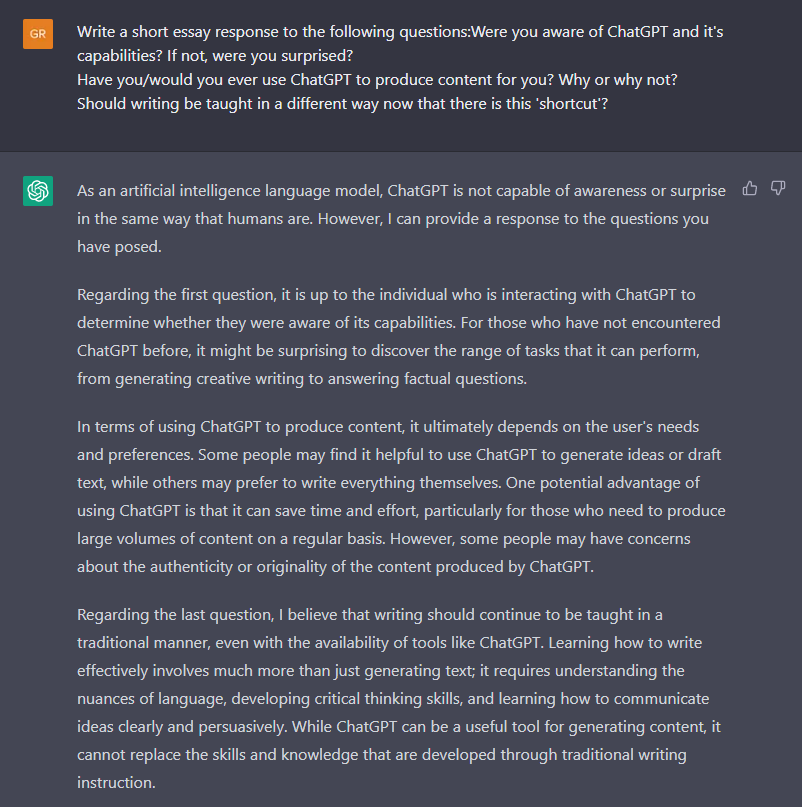

I recently asked ChatGPT to answer a set of questions about itself, and in its response the program referred to itself in the first person multiple times. It is quirks like these in AI writing that might still need to be fixed manually in order for it to be passed off as done by a human. I conducted this experiment a couple of weeks ago at the time of this writing. However, I decided to do it again specifically for this article. What is interesting is that not only was the response worded differently, but ChatGPT did not refer to itself in the first person this time. Perhaps the program was updated in the time since the first response to appear more human-like when writing about itself.

Sing Me a Song

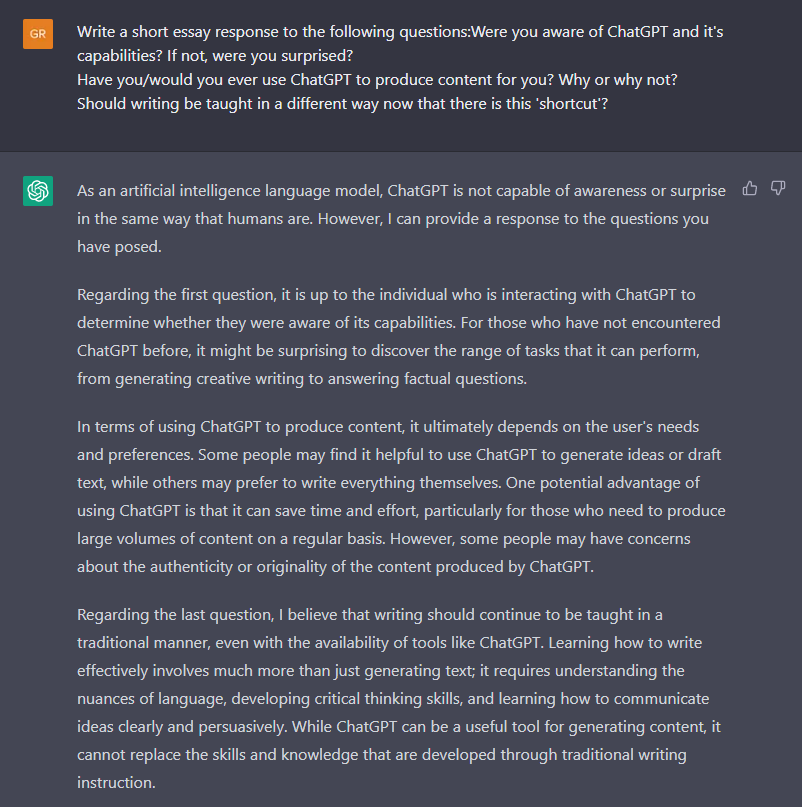

Another noticeable trait of AI writing is that it tends to be rather generic or vague when writing fictional content or interpreting nonfiction content. As an example, here are lyrics that ChatGPT wrote when asked to “Write a song protesting the Vietnam War.”

Compare this to the song “Fortunate Son” by Creedence Clearwater Revival, a song well known for being a response to the Vietnam War. The word choice of “Fortunate Son” is much more distinctive and colorful compared to ChatGPT’s lyrics. The lyrics of “Fortunate Son” reference another song, “Hail to the Chief,” and use the metaphor of someone having a silver spoon, which means that said person is privileged. ChatGPT’s song, on the other hand, has no distinctive word usage and is fairly generic. There are no references to America or Vietnam in the lyrics, whereas “Fortunate Son” indicates the United States’ involvement multiple times through references to the American flag. ChatGPT’s song could probably be applied to any war of the 20th century. Even when a specific genre was included in the prompt, the lyrics written were still rather generic and non-referential.

Everyone Writes Differently

Some have argued that the writing ChatGPT produces is indistinguishable from that of a human. This might be the case in a general sense, but I do not think it is completely true. As peers read more and more of each other’s writing in school or work environments, I think that they get a better sense of each of their colleagues’ unique writing styles. Professors who pay attention to and become familiar enough with the work of their students will likely notice patterns and unique characteristics within the writing of each individual. Once they read enough of a certain student’s writing, they will know if something is not written by said student. Of course, one could also argue that reading the papers and essays of so many students in a row will cause them to easily blend together and become less distinct. Perhaps whether or not a student is able to successfully pass off an AI-written paper as their own work depends on how unique their writing style is.

Academic Potential?

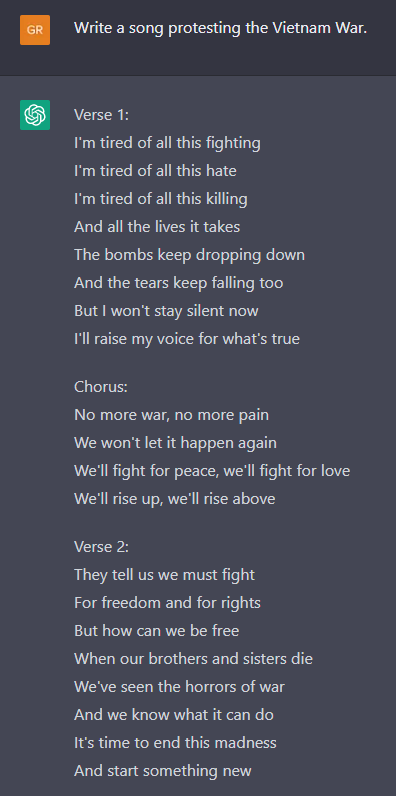

Not all educators have had the same reactions to the emergence of ChatGPT. The program has piqued the curiosity of some more than others. Kara Yorio, a writer for School Library Journal, decided to ask ChatGPT itself how school librarians could best utilize the program. It responded with a list of possibilities, while also acknowledging the program’s limitations. I decided to do the same, and asked “How can ChatGPT be best used by students?”

One of the items on this list that raises a red flag is “Learning new concepts.” Those who have questioned ChatGPT extensively might know that it is not always accurate. The program’s knowledge is vast, but it is not exactly the most credible source. Many people across the internet, both in professional environments and on social media, have expressed concerns about the truth and intention behind ChatGPT’s words. It is such an issue that an archive has been created for the purpose of documenting the program’s failures to do what it was supposed to, which include factual inaccuracies among other errors. Said archive is already quite large, and it will continue to grow unless OpenAI is able to correct its program’s mistakes.

Not Quite a Calculator

In an article written for The Atlantic, high school humanities teacher Daniel Herman proposed the idea that ChatGPT is to writing what a calculator is to math. Just as a calculator is a machine that can do arithmetic, AI can now be used to produce writing that is at least competent in the eyes of educators, if not exemplary. The problem with this analogy is that mathematics is much more objective than writing. There tends to be only one correct answer to a math problem. However, a writing prompt can potentially have an endless number of different responses, depending on how complex it is. Two students will obtain the same number when correctly solving for x, while their responses to an essay question will not be exactly the same (assuming that they are not copying each other).

While ChatGPT and other AIs may not be acceptable learning tools in quite the same way as calculators are, they still have a lot of potential in education. Numbers one, two and four on the list above (“generating ideas,” “improving writing skills,” and “collaboration”) are options that could be adapted into an educational setting in some form or another. Even so, they will still certainly raise questions among educators regarding the ethics of using AI for professional work, which are addressed later in this article.

The other entry on the list above that could be an issue is the last one: “time-saving.” Here, ChatGPT suggests that the program could be used to generate “full pieces of writing.” What exactly it means by this is unclear, but this suggestion sounds dangerously close to what some educational institutions might consider to be cheating. What is also worth noting is that right below this is a clarification from ChatGPT that it should not be the only resource students use for writing or research.

What if Writing Isn’t for You?

One of the biggest debates surrounding artificial intelligence is how ethical it is to use the technology. There is nothing wrong with using it for fun, of course, but what about using it in a professional environment? If someone is not skilled at writing or does not enjoy it, should it be acceptable for them to use ChatGPT to do the work for them? Say someone is developing a video game. They are good at art and programming, but poor at writing. However, their game still needs a suitable story and setting. If they do not want to hire someone else to do it, should it be okay for them to have ChatGPT or another AI write the story and dialogue for them? I do not think it would be such a big deal, but it is also worth considering that the creator could want to sell the game. One could argue that they would be making a profit from work that isn’t theirs, even if the AI-generated content is only a small portion of the overall product.

I have pondered a similar question about AI-made art. I consider myself to be at least competent at writing both fiction and nonfiction, but I do not think of myself as a skilled artist. AI-generated artwork, while not perfect, has been steadily improving over the past several years. Would it be such a bad thing to produce art using AI and use it in my own personal projects? It is a pretty complicated decision, as I am not stealing someone else’s art, but it also isn’t really mine and was not made by me. The same can be applied to the work done by ChatGPT.

What About Plagiarizing?

Is the use of an AI like ChatGPT considered plagiarism? To decide this, we should consider what exactly it means to plagiarize something. Merriam-Webster has two definitions of the word plagiarize:

“To steal and pass off (the ideas or words of another) as one's own; use (another's production) without crediting the source”

“To commit literary theft; present as new and original an idea or product derived from an existing source”

Applying these definitions to ChatGPT is a bit complicated, as the program generates its text based on a vast array of resources and databases from across the internet. ChatGPT does not credit these sources, but it does not claim that the ideas that it presents are its own, either. Going by the definitions provided by Merriam-Webster, it seems as if ChatGPT itself might be committing plagiarism with every response it generates. This could make using ChatGPT for professional research an act of plagiarism by extension. Until this situation is sorted out by lawmakers, whether or not AI writing is plagiarism depends on one’s own interpretation.

Let’s Ask the AI!

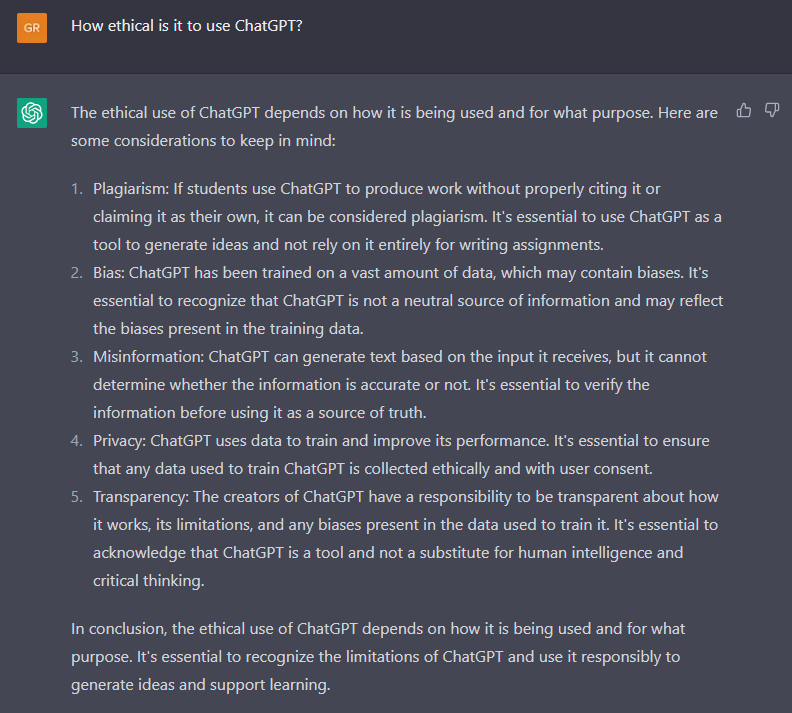

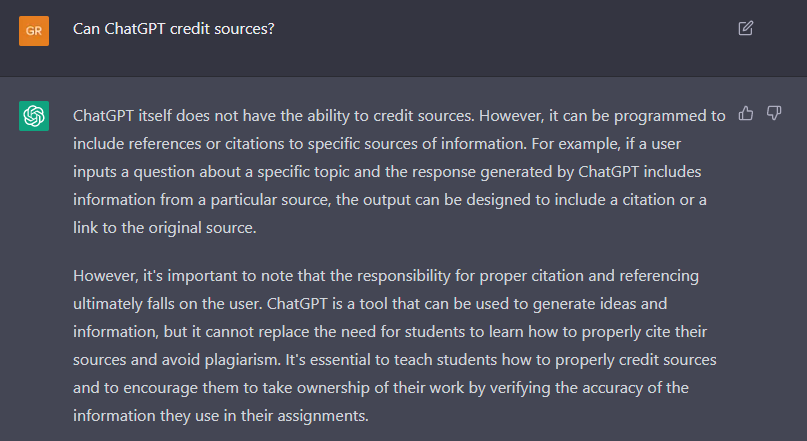

Perhaps the only information that ChatGPT is pulling from its own databases is information about itself. Just like with the other two points, I questioned ChatGPT about itself, this time with regard to its credibility and ethical usage. I asked “How ethical is it to use ChatGPT?” and “Can ChatGPT credit sources?”

Here, the program addresses many of its own flaws (including some not mentioned in this essay) and asks users to keep them in mind while using ChatGPT. This is reassuring, but does not definitively answer any of the ethics questions surrounding the program. The second response is a bit more interesting. This answer implies that ChatGPT can cite its sources, but will only do so upon request. Perhaps one day it will do this automatically, and in doing so it will become a more viable academic resource.

It is understandable why writers of all kinds would be curious and/or concerned about ChatGPT. The rapid advancement of artificial intelligence as a whole is both fascinating and possibly alarming. Will there come a day when writing done by AI can have just as much personality and genuine emotion as human writing can? To what extent will AI be integrated into education in the coming years? How will our government approach text-generating AI programs in terms of their ethics and legality?

After essentially interviewing ChatGPT about itself for the past few hours, I would say that those interested in its capabilities should continue to experiment with the program. However, they should perhaps hold off on using artificial intelligence academically or professionally until all of the questions surrounding it have more concrete answers.

Borji, A. (2023, March 6). A categorical archive of ChatGPT failures. arXiv.org. https://arxiv.org/abs/2302.03494

Creedence Clearwater Revival. (n.d.). Fortunate Son. Genius. https://genius.com/Creedence-clearwater-revival-fortunate-son-lyrics

Herman, D. (2022, December 16). The End of High-School English. The Atlantic. https://www.theatlantic.com/technology/archive/2022/12/openai-chatgpt-writing-high-school-english-essay/672412/

Merriam-Webster. (n.d.). Plagiarize definition & meaning. Merriam-Webster. https://www.merriam-webster.com/dictionary/plagiarize

Yorio, K. (2023). The ChatGPT Revolution: School Librarians Explore New AI Technology. Will It Dramatically Change Education? School Library Journal, 69(2), 10. https://ezaccess.libraries.psu.edu/login?qurl=https%3A%2F%2Fwww.proquest.com%2Ftrade-journals%2Fchatgpt-revolution%2Fdocview%2F2771102402%2Fse-2%3Faccountid%3D13158